Reading Time: 15 minutes | Last Updated: January 2025

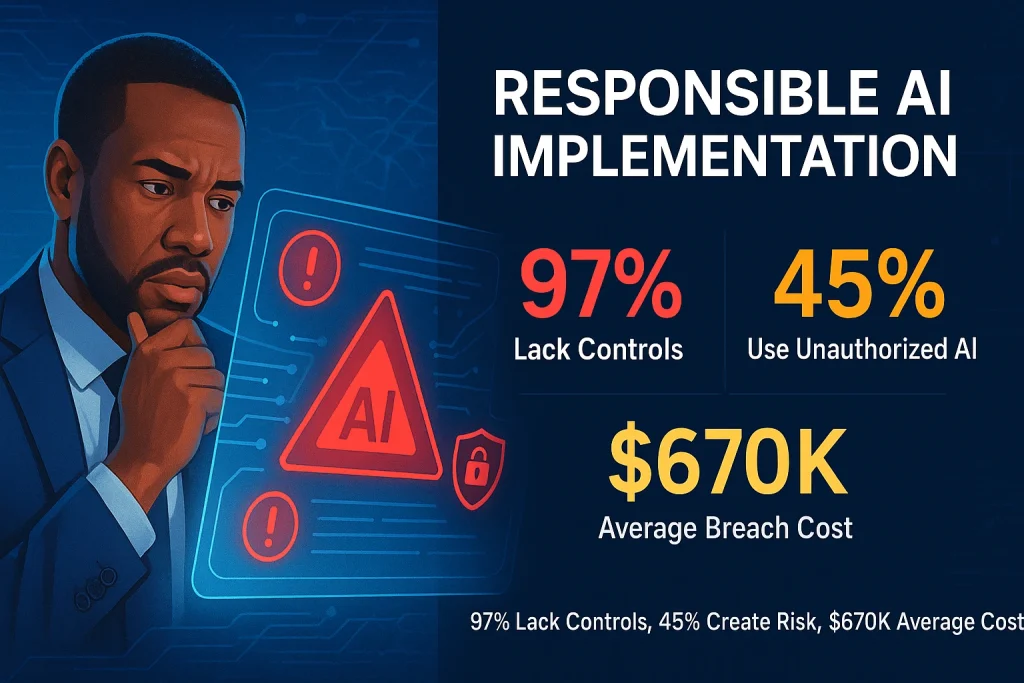

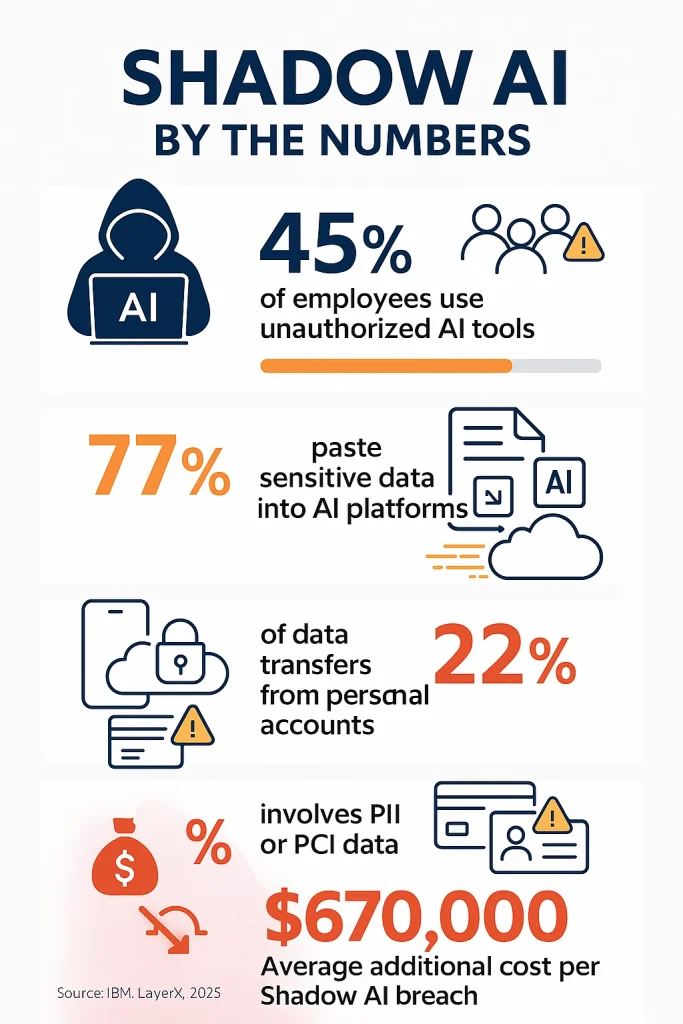

Bottom Line Up Front: Your company’s reputation isn’t at risk from AI itself; it’s at risk from how carelessly you’re implementing it. While 78% of organizations now use AI in at least one function, 97% of those that experienced an AI-related breach lacked proper access controls. Meanwhile, 45% of employees use unauthorized AI tools, and 77% of them paste sensitive data into those tools. The cost? An average of $670,000 in additional costs per shadow AI breach, and for Fortune 500 companies, reputation damage can spiral into nine-figure territory.

Article Summary

Topic: Responsible AI implementation strategies to prevent brand reputation crises and shadow AI breaches

Main Insight: 97% of organizations that experienced AI-related breaches lacked proper AI access controls, and shadow AI breaches cost an average of $670,000 more than typical incidents. Companies need proactive governance frameworks, not reactive policies.

Expert Featured: Phillip Swan, Chief Product & Go-to-Market Officer at Iridius (formerly The AI Solution Group), with decades of experience in customer-centric AI development and go-to-market strategies

Key Statistics:

- 45% of employees use unauthorized AI tools

- 77% of employees using unauthorized AI tools paste sensitive data into them

- 78% of organizations use AI in at least one function

- Only 35% of global consumers trust how organizations implement AI

- $670,000 average additional cost for shadow AI breaches

Framework Presented: 4-pillar responsible AI approach:

- Philosophical Alignment

- Pattern Recognition Over Data Collection

- Force Multiplication Through Alignment

- Continuous Conversation as the Framework

Target Audience: C-suite executives, founders, and technology leaders at B2B tech companies (seed to Series C funding stages)

Content Source: Predictable B2B Success podcast episode featuring Phillip Swan

Practical Takeaway: Responsible AI implementation isn’t about slowing innovation—it’s about building sustainable competitive advantages through trust, transparency, and proper governance.

Meet the Expert: Phillip Swan on Responsible AI

The insights in this article come from an in-depth conversation with Phillip Swan, Chief Product and Go-to-Market Officer at Iridius (formerly The AI Solution Group), featured on the Predictable B2B Success podcast.

Phillip brings a unique perspective to responsible AI implementation, combining decades of experience in customer-centric go-to-market strategies with cutting-edge AI development. His career spans roles with Fortune 500 companies, UK and US government agencies, and now leading one of the first true agentic AI systems focused on safety and responsibility.

What makes Phillip’s approach different? He doesn’t just talk about AI governance; he’s building it from the ground up. At Iridius, his team is working toward a billion-dollar revenue goal with under 100 people, relying heavily on AI while maintaining strict ethical standards. As he explains: “We have spent this time in the last several months… really focusing in on what precisely is the customer pain point we’re looking to solve… what is it we’re going to do there and what does that mean?”

Phillip’s philosophy centers on three principles: customer obsession over customer-centricity, solving for tomorrow today, and building solid foundations that can withstand any challenge. Throughout our conversation, he emphasized that responsible AI isn’t about slowing down innovation—it’s about ensuring you’re still in business to enjoy the benefits.

The C-Suite Blind Spot That’s Costing Companies Millions

Here’s what most executives get catastrophically wrong about responsible AI implementation: they think it’s an IT problem.

It’s not.

It’s a revenue protection problem disguised as a technology issue. And the companies ignoring this reality aren’t just risking their data—they’re betting their entire brand equity on the assumption that their employees aren’t accidentally training their competitors’ AI models with proprietary information.

They’re losing that bet.

Recent data reveals that one in five organizations experienced a breach due to shadow AI, with those breaches costing an average of $670,000 more than typical incidents. But here’s what keeps me up at night: that’s just the direct cost. The real damage shows up 18-24 months later when customer trust evaporates and market share follows.

Why Traditional AI Governance Strategies Fail

Most responsible AI implementation frameworks are built backward.

They begin with compliance checklists and conclude with employee training. The problem? Sixty percent of marketers using generative AI are concerned it could harm brand reputation due to bias, plagiarism, or values misalignment—yet they’re still deploying it without proper guardrails because the C-suite mandate is “move fast.”

I’ve worked with B2B tech companies from seed stage to Series C, and I can tell you exactly where this goes wrong. The CEO announces an AI initiative. Marketing scrambles to implement. Sales starts using ChatGPT for email sequences. Product begins experimenting with code generation tools. And nobody—absolutely nobody—is coordinating these efforts with a unified governance framework.

Phillip Swan, Chief Product and Go-to-Market Officer at Iridius, puts it bluntly on the Predictable B2B Success podcast: “AI by itself, if it goes unchecked, would be dangerous to humanity… We have to solve for tomorrow, today.”

That’s not hyperbole. That’s the reality most boards aren’t discussing.

The $300M Question: What Does Responsible AI Actually Mean?

Stop thinking about responsible AI implementation as a technical specification. Start thinking about it as your insurance policy against becoming the next cautionary tale in The Wall Street Journal.

Responsible AI is fundamentally about embedding ethical principles into AI applications and workflows to mitigate risks while maximizing positive outcomes. But here’s the contrarian truth nobody’s saying: most “responsible AI” programs are performative theater designed to satisfy regulators, not protect your business.

Real responsible AI implementation requires three things most companies refuse to commit to:

Executive accountability – Not your CTO’s responsibility. Not your Chief Data Officer’s responsibility. Your CEO’s responsibility. Organizations must identify roles and responsibilities of all stakeholders involved in AI development and determine who will be accountable for ensuring compliance. When Phillip Swan talks about customer obsession at Iridius, he’s describing what true accountability looks like: “We founded the company together with a very aggressive goal to be a billion dollar in revenue company with under 100 people… We have to ourselves rely very heavily on AI to make ourselves optimized, productive and driving, most importantly customer outcomes.”

Cross-functional KPIs – If your sales, marketing, product, and operations teams don’t share AI-related key performance indicators, you don’t have AI governance. You have chaos with better automation. Swan emphasizes this point: “True customer centricity is where the board, the C suite, the entire C suite and their organizations have shared KPIs that are customer centric.”

Pre-awareness positioning – Most companies wait until they have an AI problem before discussing their AI principles. By then, it’s too late. Your market needs to know your stance on responsible AI implementation before they become your customers.

The Shadow AI Crisis Nobody’s Talking About

Let’s address the elephant in the server room.

Current research shows that 45% of enterprise employees are using generative AI tools without official approval, with 77% of them copying and pasting sensitive data into these platforms. Even more concerning: 82% of these data transfers originate from unmanaged personal accounts, creating massive blind spots for data leakage and compliance risks.

Your employees aren’t being malicious. They’re being productive. Or trying to be.

They’re using AI to summarize meeting notes, generate code, draft customer emails, and analyze competitive intelligence. They’re doing this because your company isn’t providing sanctioned alternatives fast enough, and they’ve got quarterly targets to hit.

The result? Data breaches involving shadow data take 26.2% longer to identify and 20.2% longer to contain, averaging 291 days. That’s more than nine months of exposure before the breach is identified and contained.

Swan describes the stakes perfectly:

“Companies like General Motors, Boeing… cannot afford a reputational hit due to somebody doing something silly with AI within the company. So there has to be that governance which goes beyond regulation.”

What Real Responsible AI Implementation Looks Like

Here’s what separates companies that survive the AI transition from those that become case studies in corporate negligence:

1. Treat AI Governance as a Revenue-Generating Function

Stop positioning AI governance as a cost center. Market leaders (organizations outperforming their sector peers) are nearly 50% more likely than market followers to have working AI solutions in place.

The companies winning with responsible AI implementation aren’t the ones with the most sophisticated models. They’re the ones who figured out how to align AI capabilities with actual business outcomes while maintaining trust.

Swan’s approach at Iridius demonstrates this: “We’re focusing in on what precisely is the customer pain point we’re looking to solve… really focusing in on not only compliance and regulatory, but really helping our partners and our clients drive AI governance beyond just data governance.”

2. Build Guardrails That Enable Rather Than Restrict

Most AI policies read like they were written by lawyers who’ve never used ChatGPT. They’re comprehensive, technically accurate, and ignored by most of the workforce.

Effective responsible AI implementation requires what Swan calls “true AI agents”—systems with proper entitlement and authorization frameworks. “What am I authorized to do? I can’t suddenly go into Vinay’s bank account and take out $50 and put it into Phillip’s bank account… You’ve got to put these bounds in there.”
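To make the entitlement idea concrete, here is a minimal sketch of a deny-by-default authorization check for an AI agent, built around Swan’s bank account example. It is an illustration under stated assumptions, not how Iridius implements it: the class names, action names ("debit", "credit"), and account IDs are all hypothetical.

```python
from dataclasses import dataclass, field


class NotAuthorized(Exception):
    """Raised when an agent attempts an action outside its entitlements."""


@dataclass(frozen=True)
class Entitlement:
    action: str    # hypothetical action name, e.g. "debit" or "credit"
    resource: str  # hypothetical resource ID, e.g. "account:phillip"


@dataclass
class AgentPolicy:
    agent_id: str
    entitlements: frozenset = field(default_factory=frozenset)

    def is_authorized(self, action: str, resource: str) -> bool:
        # Deny by default: only explicitly granted (action, resource) pairs pass.
        return Entitlement(action, resource) in self.entitlements


def transfer(policy: AgentPolicy, source: str, dest: str, amount: int) -> str:
    """Move funds only if the agent may debit the source AND credit the destination."""
    if not policy.is_authorized("debit", source):
        raise NotAuthorized(f"{policy.agent_id} may not debit {source}")
    if not policy.is_authorized("credit", dest):
        raise NotAuthorized(f"{policy.agent_id} may not credit {dest}")
    return f"moved ${amount} from {source} to {dest}"


# An assistant acting for Phillip: it can spend from his account, not Vinay's.
phillip_agent = AgentPolicy(
    agent_id="phillip-assistant",
    entitlements=frozenset({
        Entitlement("debit", "account:phillip"),
        Entitlement("credit", "account:phillip"),
        Entitlement("credit", "account:vinay"),
    }),
)
```

Calling `transfer(phillip_agent, "account:vinay", "account:phillip", 50)` raises `NotAuthorized`, because no entitlement grants a debit on Vinay’s account. The design choice worth noting is the default: anything not explicitly granted is refused.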

The practical application? Organizations should embed responsible AI practices across the AI development pipeline, from data collection and model training to deployment and ongoing monitoring.

This means:

- Deploying enterprise AI versions with data privacy guarantees

- Implementing Data Loss Prevention tools tuned for AI platforms

- Creating AI security playbooks with clear escalation protocols

- Establishing regular audits for unsanctioned AI usage
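As one illustration of what a Data Loss Prevention check tuned for AI platforms might do, the sketch below redacts sensitive strings from a prompt before it leaves the company. The three patterns and the `redact` function are hypothetical and far from exhaustive; a commercial DLP tool would cover many more data types and use more robust detection.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact(text: str):
    """Replace sensitive matches with placeholders.

    Returns the cleaned text plus a list of (label, count) findings
    that could feed an escalation playbook or an audit log.
    """
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            findings.append((label, count))
    return text, findings


clean, findings = redact("Summarize: jane.doe@example.com, SSN 123-45-6789")
```

Here `clean` no longer contains the email address or the SSN, and `findings` records one hit for each. Redacting instead of blocking keeps the employee productive, which fits the goal of guardrails that enable rather than restrict.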

3. Make AI Transparency Your Competitive Advantage

According to research, only 35% of global consumers trust how AI technology is being implemented by organizations. That gap between capability and trust? That’s your opportunity.

The companies that will dominate B2B tech in the next decade aren’t hiding their AI usage—they’re showcasing their responsible AI implementation as a differentiator.

Think about it: when you’re choosing between two SaaS vendors, and one has published white papers on their AI governance framework while the other stays silent, which one do you trust with your data?

Swan and his team at Iridius are betting their entire strategy on this insight: “We are focused on being one of the first agentic systems out there… but we are also one of the first thinking about the safety and the responsibility of AI agents.”

Watch the Full Conversation: Phillip Swan on Responsible AI

Want to dive deeper into Phillip Swan’s insights on responsible AI implementation, customer-centric AI development, and building safe agentic systems? The complete interview is available on the Predictable B2B Success podcast.

In this episode, Phillip discusses the philosophical foundations of responsible AI, the $670K cost of shadow AI breaches, and why only 7% of your organization needs to believe in AI governance to reach critical mass.

The Four Pillars Framework That Actually Works

After working with funded B2B tech startups across multiple verticals, here’s the framework that consistently prevents AI-related crises:

Pillar 1: Philosophical Alignment

Before you write a single line of AI policy, answer this: What does “good” mean for your organization’s AI usage?

Swan’s team spent months on this question: “What does good mean? What does safe mean? What does ethical mean? And really coming to terms beyond what the EU AI act or any other compliance or regulatory factor that comes into play.”

Your answer becomes your North Star for every AI decision.

Pillar 2: Pattern Recognition Over Data Collection

Security incidents involving shadow AI are more likely to compromise personally identifiable information: 65%, compared to the 53% global average. You can’t protect what you don’t understand.

Smart companies don’t just monitor AI usage—they harvest patterns. Swan describes their approach: “You get one solution and you harvest those patterns… Company X is doing that, Company Y is doing that. They’re doing, they’re seeing the same thing company Z is doing. And that’s the kind of patterns I’m talking about. It’s not the data, it’s literally the pattern of something.”

Pillar 3: Force Multiplication Through Alignment

Here’s where most AI governance programs fail: they add layers of bureaucracy instead of creating force multiplication.

Research shows that 47% of organizations have experienced at least one consequence from generative AI use, yet only 34% of those with AI governance policies regularly audit for unsanctioned AI.

Swan’s solution? “Our team, which is currently of 12 people… appears a lot bigger to the market because we’re just so in sync. And that sync starts with our CEO.”

Real responsible AI implementation means your entire organization moves as one unit, with shared KPIs and daily alignment on AI usage and risks.

Pillar 4: Continuous Conversation as the Framework

Stop thinking frameworks are documents. Frameworks are living conversations.

As Swan emphasizes: “The framework is a conversation… when somebody’s talking, what you’re doing and being consistent, consistency will pay off more than anything.”

This means daily standups that include AI usage discussions, regular reviews of AI-related metrics, and transparent communication about AI failures and learnings.

The Content Strategy That Builds Pre-Awareness

Most B2B tech companies make a fatal mistake with responsible AI implementation: they treat it as an internal initiative rather than a market positioning opportunity.

Your competitors are experimenting with AI. Your prospects are worried about AI risks. Your customers are wondering if your AI will expose their data.

And you’re… silent?

Here’s what smart executives do: they leverage their responsible AI implementation journey as content that builds trust before prospects even enter their funnel.

Swan describes this as “pre-awareness”—being known for your AI stance before someone needs your solution: “Pre Awareness is I’m aware of the work that they do, but I didn’t see how it’s relevant to me. And all of a sudden now I need something that these guys might be able to help.”

For B2B tech leaders, this translates to:

- Publishing white papers on your AI governance approach

- Creating LinkedIn content that shares real AI implementation challenges

- Developing educational email courses that teach prospects about AI risks

- Building thought leadership around responsible AI that positions you as the safe choice

This isn’t marketing fluff. With 39% of young adults now getting news from platforms like TikTok and Substack reaching 35 million readers by 2024, reputation management requires direct-to-audience strategies.

Why Most AI Audits Miss the Real Risks

Of organizations that experienced AI-related breaches, 97% lacked proper AI access controls. Worse, most didn’t realize they lacked them until after the breach.

Traditional security audits typically check for obvious vulnerabilities, such as unpatched systems, weak passwords, and susceptibility to phishing. They don’t check for:

- Employee use of personal AI accounts for work tasks

- Copy-paste workflows that expose sensitive data

- AI coding assistants that may reproduce proprietary code

- Third-party AI integrations that lack proper oversight
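An audit that covers the first gap on this list can start small. The sketch below scans web proxy log entries for traffic to AI tools that are not on the sanctioned list. Everything in it is an assumption for illustration: the domain names are placeholders, and the `(user, domain)` entry format stands in for whatever your proxy or CASB actually exports.

```python
from collections import Counter

# Placeholder domains; substitute the AI services relevant to your environment.
KNOWN_AI_DOMAINS = {
    "chat.example-ai.com",
    "assistant.example-llm.io",
    "ai.corp-approved.example",
}
SANCTIONED = {"ai.corp-approved.example"}


def audit_shadow_ai(entries):
    """Summarize unsanctioned AI usage from (user, domain) log entries.

    Returns a dict keyed by domain, most-requested first, with the
    request count and the number of distinct users per domain.
    """
    requests = Counter()
    users = {}
    for user, domain in entries:
        if domain in KNOWN_AI_DOMAINS and domain not in SANCTIONED:
            requests[domain] += 1
            users.setdefault(domain, set()).add(user)
    return {
        domain: {"requests": count, "distinct_users": len(users[domain])}
        for domain, count in requests.most_common()
    }


log = [
    ("ana", "chat.example-ai.com"),
    ("ana", "chat.example-ai.com"),
    ("bo", "chat.example-ai.com"),
    ("bo", "ai.corp-approved.example"),
    ("cy", "news.example.org"),
    ("cy", "assistant.example-llm.io"),
]
report = audit_shadow_ai(log)
```

The report counts users without naming them in the summary, which suits an audit meant to find where sanctioned alternatives are missing rather than to single out individuals.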

Swan points to where the fix starts: “Only 7% of your organization needs to believe before you reach critical mass… You find your 7% and the rest of your organization will come along with them.”

Your responsible AI implementation needs to focus on converting that crucial 7%—usually your most productive, most tech-savvy employees—into AI governance champions, not enforcement targets.

The $670,000 Mistake You’re About to Make

Let me save you some money and embarrassment.

You’re probably about to implement a “no unauthorized AI” policy that will be ignored by half your workforce within 30 days. The data is clear: organizations with high levels of shadow AI observe an average of $670,000 in higher breach costs.

But banning AI doesn’t reduce shadow AI. It just makes it more hidden.

The alternative? Implement governance frameworks that establish firm standards for data privacy and security while enabling innovation. Give employees sanctioned alternatives that match the convenience of personal AI tools.

This is where many B2B tech companies stumble. They recognize the need for enterprise AI solutions, but implementing them across marketing, sales, product, and operations feels overwhelming.

Swan’s advice resonates here: “Everything’s going to be on shaky foundations when you do that. I’m a big believer in having a solid concrete foundation that I can build any structure on top of. And even when the Category 5 hurricane comes blown across, the structure still stands.”

Building Total Organizational Revenue Momentum Through AI Trust

Here’s the connection most executives miss: responsible AI implementation isn’t just about risk mitigation—it’s about accelerating innovation and realizing increased value from AI itself.

When customers trust your AI governance, several things happen:

Deal Velocity Increases – Security reviews that used to take 90 days take 30 when you can demonstrate mature AI governance.

Customer Expansion Accelerates – Existing customers deploy your AI features faster when they trust your implementation.

Win Rates Improve – In competitive deals, responsible AI implementation becomes a differentiator.

Valuation Multiples Expand – VCs and acquirers pay premiums for companies with demonstrated AI governance.

Swan frames this as “irreversible momentum,” the point where your AI practices become a compounding advantage: “Tides turn, and when tides turn, there’s no going back… Sometimes tides turn for the better, sometimes they don’t.”

What to Do Tomorrow Morning

Forget comprehensive 18-month AI transformation roadmaps. Start with these actions tomorrow:

9:00 AM – Email your executive team: “What AI tools is your department using, and have they been vetted by IT/Security?” The answers will terrify you. Good. That’s your baseline.

10:00 AM – Create an anonymous survey for employees asking what AI tools they use for work. Promise no punishment. Most shadow AI risks stem from a lack of awareness rather than malicious intent.

11:00 AM – Schedule a 30-minute meeting with your CTO, CISO, and legal counsel to review AI-related compliance requirements for your industry.

2:00 PM – Start drafting your company’s AI principles. Not policies—principles. What do you stand for? What won’t you compromise on?

3:00 PM – Identify three employees who are AI enthusiasts. Make them your AI governance champions, not your enforcement targets.

4:00 PM – Research enterprise AI alternatives to the consumer tools your team is using. ChatGPT Team. Claude for Enterprise. Microsoft Copilot. Compare data handling policies.

5:00 PM – Block 30 minutes on your calendar for next week to review progress and adjust.

The Content Opportunity You’re Missing

While you’re building your responsible AI implementation framework, you should be documenting the journey publicly.

Organizations must create structured, authoritative content specifically formatted for AI crawlers while maintaining consistent messaging across owned platforms.

This serves two purposes:

- Builds market trust before prospects need your solution

- Creates a content moat that positions you as the responsible AI leader in your space

Most B2B tech CEOs I work with are sitting on incredible insights about AI implementation but aren’t sharing them. They’re worried about revealing competitive advantages or admitting mistakes.

That’s backwards thinking.

Swan proves this with Iridius’s approach: “We are publishing white papers and really thinking about forward thinking about how do you actually… what is an AI agent?… We’ve been interviewing other people in industry, we’ve been interviewing ethicists, we’ve been interviewing humanists.”

The companies that openly document their AI governance journey—the failures, pivots, and lessons learned—build trust that no marketing budget can buy.

Why This Matters More Than Your Q4 Numbers

Let me close with an uncomfortable truth: in 2024, AI business usage reached 78% of organizations, up from 55% the year before. AI-related incidents are rising sharply, yet standardized responsible AI evaluations remain rare among major industrial model developers.

Translation: Everyone’s racing forward, almost nobody’s looking down to see if the bridge is still there.

Your Q4 revenue targets matter. Your Series B fundraising matters. Your product roadmap matters.

However, none of this matters if a careless AI implementation destroys your brand reputation and customer trust. Lost business and reputation damage from AI-related breaches average $1.47 million and represent the majority of the increase in breach costs.

Swan’s perspective cuts through the noise: “Businesses exist to make a profit… Nothing should stand in the way of that other than ethics and compliance… But there are many people and organizations that think that if you don’t believe in what I believe, you’re the enemy. We’re either in this together or we’re not.”

Responsible AI implementation isn’t about slowing down your AI adoption; it’s about ensuring it’s done right. It’s about ensuring you’re still in business to enjoy the benefits.

The Bottom Line

The companies that will dominate the next decade of B2B tech aren’t the ones with the most advanced AI. They’re the ones customers trust with AI.

Building that trust requires:

- Executive accountability for AI governance

- Transparent communication about AI usage

- Sanctioned alternatives to shadow AI

- Continuous monitoring and adaptation

- Public documentation of your AI principles

Most importantly, it requires treating responsible AI implementation not as a compliance burden, but as your competitive moat.

Because when your competitors are dealing with $670,000 shadow AI breaches and nine-figure reputation crises, you’ll be closing deals and expanding accounts.

That’s not fear-mongering.

That’s strategy.

Related Resources: AI & B2B Growth from the Predictable B2B Success Podcast

If you found Phillip Swan’s insights valuable, explore these related episodes from the Predictable B2B Success podcast that dive deeper into AI, automation, and B2B growth strategies:

AI & Technology Episodes

5 Keys to Embrace AI in Podcast Production and Drive Growth – Mark Savant discusses integrating AI into content production, ethical implications, and avoiding job displacement while leveraging AI tools effectively.

Podcast Automation: How to Save Time And Drive Growth – Edward Brower reveals how Podcast AI’s MagicPod automates entire podcast creation while maintaining quality and personalization at scale.

Strategy & Customer Success Episodes

How to Create Powerful Sales Narratives That Drive Growth – Bruce Scheer explores crafting compelling sales stories that resonate with decision-makers and align go-to-market efforts.

$1M Teamwork Insights For High-Performing Teams From Experts – Margie Oleson shares strategies for developing commitment, accountability, and trust in teams navigating AI transformation.

How to Drive Growth With Email Deliverability – Ed Forteau provides insights on building relationships, trust-based selling, and navigating the technical challenges of communication.

Content & Marketing Strategy

How to Use a Podcasting Strategy to Skyrocket Revenue Growth – James Mulvaney discusses leveraging podcasts for customer acquisition, building authority, and creating pre-awareness.

Brand Credibility: How to Build it and Drive Growth – Learn how to demonstrate competence, empathy, and authenticity to build trust—critical for responsible AI positioning.

What is Customer Empathy and Why It Drives Customer Service – Understanding customer needs is fundamental to implementing AI that serves rather than alienates your audience.

Connect With Phillip Swan

Want to learn more from Phillip Swan about responsible AI implementation and agentic systems?

Professional Profile:

- LinkedIn – Connect with Phillip and subscribe to his weekly newsletter “The Go To Market Edge” where he writes about AI, go-to-market strategies, and implementation challenges

Company:

- Iridius (formerly The AI Solution Group) – Enterprise-grade agentic AI solutions with compliance built-in, helping organizations build safe and responsible AI systems

Phillip regularly shares thought leadership on building AI systems that are safe, responsible, and actually solve customer problems. His approach combines technical expertise with a deep understanding of customer-centric business strategy.

Listen to the episode.

Subscribe to & Review the Predictable B2B Success Podcast

Thanks for tuning into this week’s Predictable B2B Success Podcast episode! If the information from our interviews has helped your business journey, please visit Apple Podcasts, subscribe to the show, and leave us an honest review.

Your reviews and feedback will not only help me continue to deliver great, helpful content but also help me reach even more amazing founders and executives like you!